Another very educational Build Day Live event is complete, and I still find that each event feels different. The Build Day Live with Supermicro felt like I was learning a lot about the company and not so much about the specific product that we deployed. I already knew that Supermicro is a server vendor in a category of its own: not an all-encompassing behemoth like some of the other big vendors, but not just an assembler of components like some lower-cost vendors. I knew that Supermicro designs and manufactures its servers and that its engineering is top-notch. What I didn't realize was that the engineering and manufacturing for products shipped to US customers happen in San Jose. I didn't know how good Supermicro is at reusing engineering across product families through modular designs. I also had no idea that there is a complete range of Supermicro data center network switches.

Link Dump

- Build Day Live landing page for Supermicro

- Supermicro Build Day Live playlist on YouTube

- Anthony Hook – Build Day Live with Supermicro

- Brett Johnson – Build Day Live! Supermicro – Big Twin

- Paul Braren – BigTwin unboxing

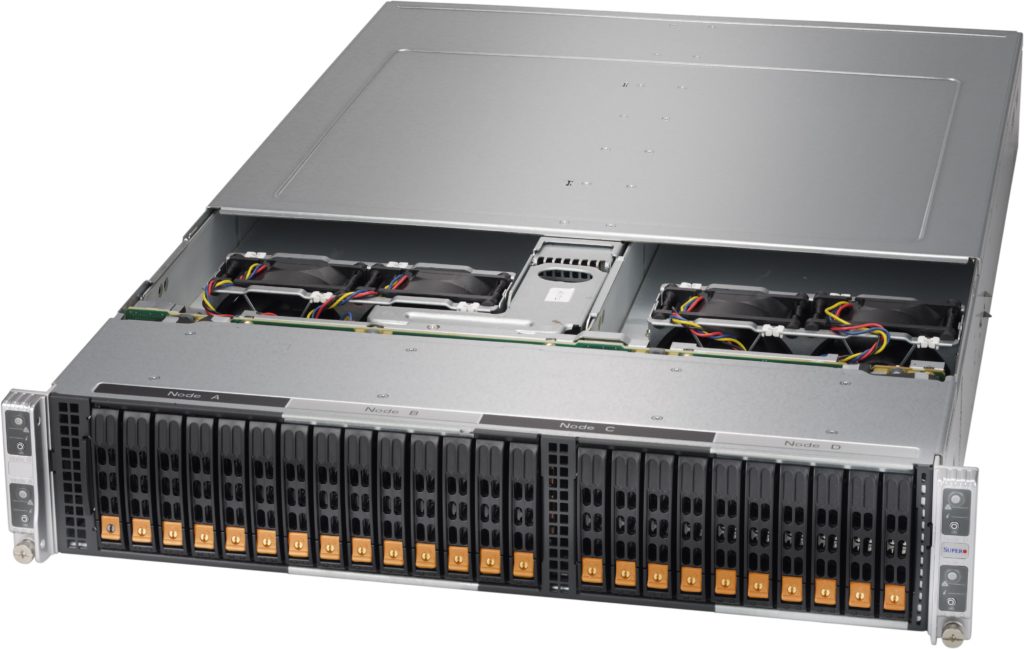

Every Build Day Live event has some element of deploying infrastructure to show what customers experience. For the Supermicro event, we implemented a four-node VSAN cluster and a single ESXi server to host a GPU-accelerated desktop. The PowerCLI script that I used to configure the nodes and build the cluster is in the brand-new Build Day Live repository on GitHub, in case you want to reuse any of it. Because I already have full ESXi build automation from AutoLab, the VSAN deployment was a bit anticlimactic. It is a good thing that deploying VSAN on a Supermicro Big Twin is a routine activity, because production storage and hypervisors should be boring. Even using Intel Optane drives for the performance tier in the all-flash VSAN configuration didn't cause any issues, although it made for impressive VSAN performance. The only complicated part was creating the ESXi install ISO with the right NIC driver. I followed the process that Vladan outlines on his excellent blog, then used the resulting ISO to populate the AutoLab PXE deployment folders. I should also have added the Intel NVMe driver to the ISO; that driver enables hotplug for the NVMe drives.

The engineering at Supermicro is impressive. There are unusual features, such as servers with a modular LAN interface on the motherboard. The Big Twin nodes we used had dual 10GbE SFP+ ports, but could equally have had four SFP+ ports, 10GbE RJ45 ports, or a variety of InfiniBand or Omni-Path interfaces. The same modular network interfaces could go in mainstream 1U or 2U enterprise servers, storage servers, or similar rackmount systems. This reuse allows each module and its driver to be optimized once and then used across the product range, rather like an add-on network card, but using the built-in network features of the Intel Xeon CPUs.

The Supermicro data center network portfolio is pretty impressive, from 1GbE all the way up to 100GbE switches. The range was created in response to customer demand: large data center customers wanted leaf-spine networking equipment from the same vendor as their racks of compute and storage servers. Most of the products come with a Supermicro operating system and management interface, but there are also open switches that are certified with Cumulus Linux for customers who need an open Software Defined Network (SDN) platform.

Hopefully, we will return to Supermicro for more Build Day Live events. I would like to see a scale-out Software-Defined Storage product deployed onto their storage servers, either all-flash or high-capacity hybrid servers. I would be very interested in digging deeper into the rack scale architecture that was mentioned a couple of times; composability seems to be a significant trend in bringing public-cloud-like agility on-premises. I would also like to see what deployment is like on the high-density blades that we saw, maybe with a hypervisor and VDI, or perhaps a bare-metal Kubernetes deployment. We also got some tantalizing mentions of AI/ML products that Intel and Supermicro are working on together; maybe we can show some real business use of machine learning.