This guest post is by Steven Kang, who blogs at ssbkang.com, where you can find his back catalogue of posts. Find out more about the guest blogger program here.

INTRODUCTION

While upgrading ESXi servers from 5.0 to 5.5, I ran into an issue where a number of virtual machines could not be vMotioned when their host entered maintenance mode.

In this blog post, I'll share how I worked through the issue and found the solution.

SYMPTOM

The initial symptom was that whenever I vMotioned one of several affected virtual machines, the task stopped at 14% with the message:

“A specified parameter was not correct”

INVESTIGATION

Before diving in, let’s be more specific. There were four ESXi servers, all running version 5.0 with no update applied, which I will call:

- ESXiA

- ESXiB

- ESXiC

- ESXiD

A number of virtual machines had this issue, but I picked one, VMA, which was registered on ESXiA. With this virtual machine:

- vMotion to ESXiC & ESXiD worked

- vMotion to ESXiB didn’t

Revisiting the symptom above, vMotion stopped at 14%, which means ESXiA was about to start transferring the memory state when it failed.

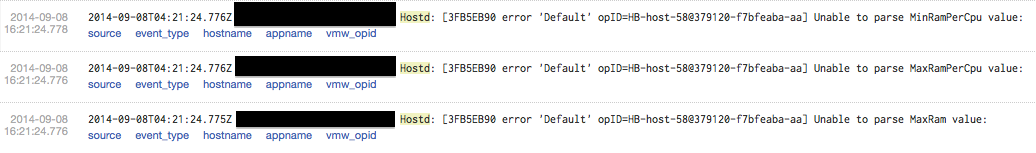

My first step was to check the VMkernel log to figure out why the memory transfer had aborted, but it didn’t indicate anything. The next log I looked at was hostd.log, where I found the following line:

The error message looked related to the issue. However, according to this VMware KB, this was a known issue on ESXi 5.0 with no update applied, and it could be safely ignored.
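To repeat this kind of log search on several hosts, a small script can save some scrolling. The sketch below is an illustration, not part of the original troubleshooting: it assumes copies of /var/log/hostd.log and /var/log/vmkernel.log (their default locations on ESXi 5.x) have been pulled off each host, and the function names and search patterns are hypothetical. It simply reports every line that matches any of the given regular expressions, ignoring case.

```python
import re
from typing import Iterable, List, Tuple


def find_matching_lines(lines: Iterable[str], patterns: List[str]) -> List[Tuple[int, str]]:
    """Return (line_number, line) pairs for lines matching any pattern.

    Patterns are regular expressions matched case-insensitively;
    line numbers are 1-based.
    """
    compiled = [re.compile(p, re.IGNORECASE) for p in patterns]
    hits = []
    for number, line in enumerate(lines, start=1):
        if any(rx.search(line) for rx in compiled):
            hits.append((number, line.rstrip("\n")))
    return hits


def scan_log_file(path: str, patterns: List[str]) -> List[Tuple[int, str]]:
    # Hypothetical helper: `path` is a local copy of hostd.log or vmkernel.log.
    with open(path, encoding="utf-8", errors="replace") as handle:
        return find_matching_lines(handle, patterns)


if __name__ == "__main__":
    import sys

    # Usage: python scan_logs.py hostd.log vmkernel.log
    # The patterns are examples only; adjust them to what you are chasing.
    search_patterns = [r"A specified parameter was not correct", r"swap"]
    for log_path in sys.argv[1:]:
        for number, line in scan_log_file(log_path, search_patterns):
            print(f"{log_path}:{number}: {line}")
```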

While looking at the virtual machine configuration, I noticed something odd: the virtual machine wasn’t showing the correct swap file datastore. Because our environment uses dedicated VMFS volumes for swap files, the Summary tab should normally have listed:

- datastore1

- datastore2

- swap_datastore1

But it didn’t show swap_datastore1. Since the swap file moves with the virtual machine configuration files, I tried a Storage vMotion of the configuration files to another datastore and, sure enough, all the datastores started showing up correctly. However, vMotion failed again with the same error message and, guess what, swap_datastore1 disappeared again. This showed that the failure occurred when ESXi tried to transfer the swap file to another dedicated swap datastore.

The next step was to see what was going on with the ESXi server by connecting to it directly with the vSphere Client. Surprisingly, there were a lot of pending jobs named:

“Register virtual machine”
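Long-pending jobs like these can also be picked out programmatically. The sketch below is an illustration under several assumptions, not the approach used in this post: `TaskRecord` is a hypothetical stand-in whose field names mirror the vSphere `TaskInfo` object (`descriptionId`, `state`, `queueTime`), `Folder.registerVm` is assumed to be the description ID of “Register virtual machine” jobs, and the 30-day threshold is arbitrary. It returns unfinished register jobs that have been queued longer than the threshold, oldest first.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, List


@dataclass
class TaskRecord:
    # Hypothetical stand-in for vSphere TaskInfo; field names mirror it
    # so real TaskInfo objects could be passed in the same way.
    descriptionId: str
    state: str  # "queued", "running", "success" or "error"
    queueTime: datetime


# Assumed description ID for "Register virtual machine" jobs.
REGISTER_VM_ID = "Folder.registerVm"


def stale_register_jobs(tasks: Iterable[TaskRecord], now: datetime,
                        max_age: timedelta = timedelta(days=30)) -> List[TaskRecord]:
    """Return unfinished register-VM jobs queued longer than max_age, oldest first."""
    stale = [
        t for t in tasks
        if t.descriptionId == REGISTER_VM_ID
        and t.state in ("queued", "running")
        and now - t.queueTime > max_age
    ]
    return sorted(stale, key=lambda t: t.queueTime)
```

Because the fields mirror `TaskInfo`, the same filter should accept the `info` objects of the tasks in pyVmomi's `content.taskManager.recentTask`, for example `stale_register_jobs([t.info for t in content.taskManager.recentTask], datetime.now(timezone.utc))`, assuming the returned `queueTime` values are timezone-aware. The connection code is deliberately left out so the filter can be tried on its own.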

SOLUTION

To fix this problem, all queued jobs on this ESXi server had to be cleared.

My first attempt was to cancel the jobs by:

- Right-clicking each job

- Selecting Cancel

However, Cancel was greyed out. As I wasn’t sure which service handles the register virtual machine job, I restarted the management services by running “services.sh restart” on the ESXi server. My connection to the ESXi server dropped, and after I logged back in, the jobs had been cleared. I retried vMotion and the issue was fixed.
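Restarting the management services on all four hosts can be scripted over SSH from a workstation. The sketch below is hedged and hypothetical: it assumes SSH is enabled on each host, that you can log in as `root`, and that the placeholder names ESXiA to ESXiD resolve to your real hosts; the helper names are made up for illustration. By default it only prints the commands; pass `dry_run=False` to actually run `services.sh restart` on each host in turn.

```python
import shlex
import subprocess
from typing import List, Sequence

# Placeholder host names from this post; replace with real host names.
HOSTS = ["ESXiA", "ESXiB", "ESXiC", "ESXiD"]


def restart_commands(hosts: Sequence[str], user: str = "root") -> List[List[str]]:
    """Build one ssh command per host that runs `services.sh restart`."""
    return [["ssh", f"{user}@{host}", "services.sh restart"] for host in hosts]


def restart_management_services(hosts: Sequence[str], dry_run: bool = True) -> None:
    for cmd in restart_commands(hosts):
        if dry_run:
            # Only print what would run; nothing is executed.
            print(shlex.join(cmd))
        else:
            # check=True raises CalledProcessError if ssh exits non-zero,
            # so the loop stops at the first host that fails.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    restart_management_services(HOSTS, dry_run=True)
```

Running the hosts one after another rather than in parallel keeps only one host’s management agents down at a time; expect each host’s client connection to drop briefly, as described above.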

WRAP-UP

To summarise the issue and solution:

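- Issue: vMotion stopped at 14% with the error “A specified parameter was not correct”

- Cause: stale “Register virtual machine” jobs were queued on the ESXi server

- Solution: restarted management services on all ESXi servers to clear the jobs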

Surprisingly, the very first job had been queued for more than a month (not shown in the screenshot above). I suspect the issue happened as follows:

- Virtual machines were being vMotioned to other ESXi servers by DRS

- During this, there was a storage outage that affected all swap file VMFS volumes (yes… there was one)

- As a result, the jobs hung because ESXi couldn’t find the appropriate swap VMFS volumes

I hope this helps those of you facing the same issue.