This guest post is by Rob Nelson, who blogs at http://rnelson0.com/, where you can find his back catalogue of posts. Find out more about the guest blogger program here.

One of the most important concepts in The Goal is increasing throughput. Throughput is the rate at which the system generates money through sales. That is, when your company takes raw materials, processes them into a finished good, and sells it, the measured rate of that activity is your throughput. The emphasis is firmly on sales. Throughput is not the same as efficiency. Today, we will look at throughput vs. efficiency and how these concepts apply to IT.

Though we are focusing on throughput, we should also define the other two measurements. Inventory is all the money that the system has invested in purchasing things which it intends to sell. Operational expense is all the money the system spends in order to turn inventory into throughput. I list the three definitions together because they are precise and interconnected: changing even a single word in one requires that the other two be adjusted as well.

Another important point about throughput is that it measures the entire system, not a single location. Whether you work in your garage or in a giant auto plant, you cannot measure throughput locally; it must be measured over the entire system. This conflicts with most companies’ measurements of local efficiency. Employers naturally want to keep all their employees busy, and employees like to see their coworkers pull their own weight. Why should Jane get to twiddle her thumbs at the Fob machine when Jill is busy pushing pallets of Fob parts around the floor? Is it fair to George to watch Jeff read the newspaper while he has to inspect hundreds of parts for quality control? And shouldn’t Jane and Jeff worry that they might be reprimanded or fired for not being efficient, or draw the ire of their coworkers?

A Plant Floor

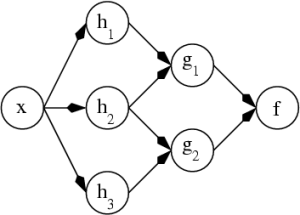

What is a manufacturing plant? There are many ways to describe one, but at the high level we will use, it is an interconnected dependency graph. A small plant might look like the graph above, while larger plants can be, well, confusing. In our small plant, X is where parts are received and F is where they are assembled. The H and G workstations are where raw or partially processed goods are received and processed before being sent onward.

Lots of parts are received at station X all day long. A truck arrives, a team unloads its contents, and three teams of runners bring those parts to stations H1, H2, and H3. These people are pretty much always busy – the runners are moving parts from the last truck while the unloaders are working on the current truck, and the plant manager does a great job of making sure there’s a constant stream of trucks arriving. By nearly any measurement, these people are efficient.

The teams at stations H1-H3 are very busy, but not all the time. On average, H1 receives 100 parts/hr, H2 gets 30/hr, and H3 gets 200/hr. That’s the rough ratio of parts required to assemble your product (10:3:20), and roughly the capacity of each machine. Sometimes a truck carries a bit more or less of a part, depending on how the supplier packs it, which means that sometimes H1 only gets 50/hr, and every once in a while it gets 200/hr to help average things out. So, are these teams efficient? There are two ways to measure that. We’ll look at station H1.

Common Sense

Conventional efficiency measurements vary slightly but boil down to some form of, “Are the people at station H1 not sitting around?” That’s just common sense, right? They get unfinished parts constantly and send their partially finished parts to G1. The capacity of the machine at H1 is 100 parts per hour, so when the correct amount, 100 parts, shows up in an hour, the last finished part is coming out of the machine just as the next batch of parts arrives. The team has been busy from 9am until lunchtime, loading the machine, flipping levers, and pushing buttons to turn 300 raw parts into 300 parts for G1. That sounds efficient to me.

What happens when a smaller batch of 50 parts arrives at H1 after lunch? The team loads the machine at 1pm, and at 1:30pm the last part is finished. Fred, Martha, and Tom push the pallet of parts over to G1 themselves rather than waiting for runners, but it’s only 1:35pm when they get back to their machine. No parts will arrive until 2pm, so Fred pulls out a deck of cards and they play Go Fish for 25 minutes. Suddenly the team does not seem very efficient, producing only 50% of what they are capable of.

The next hour, 200 parts arrive; they have to at some point if the 100 parts per hour average is to be maintained. The cards vanish like ghosts, and the team gives the overloaded pallets an exasperated look before getting to work on them. At 3pm, another 100 parts arrive, joining the 100 parts still sitting there from the last batch. Again, the team has 200 unfinished parts and a machine that can only handle 100 parts an hour. For the rest of the day, 100 parts arrive on each truck. When everyone goes home at 5pm, they don’t even remember a relaxed card game after lunch; they just remember being REALLY “efficient” for the last three hours, trying and never catching up. Unfortunately, the bean counters see that H1 was 50 parts short of quota for the shift, so Fred, Martha, and Tom are all considered lazy workers for the day. All that hard work, and they’re still not efficient.
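To check the arithmetic of the story, here is a small Python sketch of H1’s shift. It assumes each truck’s parts arrive at the top of the hour, join whatever is already waiting, and that the machine works through its queue at a steady 100 parts per hour; the function and variable names are made up for illustration.

```python
# Hypothetical model of H1's shift from the story: each truck's parts join
# whatever is already waiting, and the machine finishes at most 100 per hour.
CAPACITY_PER_HOUR = 100

# Parts arriving at the start of each working hour (lunch, noon to 1pm, skipped).
arrivals = {"9am": 100, "10am": 100, "11am": 100,
            "1pm": 50, "2pm": 200, "3pm": 100, "4pm": 100}


def simulate_shift(arrivals, capacity):
    """Return (parts processed, parts still waiting when the shift ends)."""
    waiting = 0
    processed = 0
    for hour, count in arrivals.items():
        waiting += count
        done = min(waiting, capacity)  # the machine can't exceed its capacity
        waiting -= done
        processed += done
        print(f"{hour:>4}: arrived {count:3}, processed {done:3}, waiting {waiting:3}")
    return processed, waiting


processed, left_over = simulate_shift(arrivals, CAPACITY_PER_HOUR)
quota = CAPACITY_PER_HOUR * len(arrivals)  # 7 working hours x 100 parts
print(f"Processed {processed} of a {quota}-part quota; {left_over} parts left on the floor")
```

The run reports 650 parts processed against a 700-part quota, which is the 50-part shortfall the bean counters see, with 100 parts still waiting in front of H1 at 5pm.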

Enlightened Sense

Warning: Math ahead! I’ll make it worth your while, I promise.

Let’s review the day through the eyes of The Goal. Remember, we don’t care about local efficiencies; we’re looking at overall system throughput, even if we are constrained by local capacity. From 9am to noon, every pallet that came in was processed and released promptly: locally efficient. From 1pm to 5pm, 450 parts came in and only 350 parts went out. That is not as locally efficient: only about 78% (350 of the 450 parts that arrived) or 87.5% (350 of the 400 parts of capacity). According to The Goal, though, the H1 team was still contributing effectively, and the employer shouldn’t view the H1 team negatively. Why are the two views so different?

Let’s look at the throughput definition once more: Throughput is the rate at which the system generates money through sales. Again, the key word is sales. When Fred pulled out his deck of cards, most people would think, “He needs to get back to work!” Why? What can Fred do, at the H1 station, to affect sales? If they don’t play Go Fish, will the company’s bank balance go up? Unless Fred can wish 50 spare parts out of thin air, it’s unlikely. H1’s crew did everything they could to contribute to throughput.

We need to fast-forward to 5pm to understand this better. If the parts shipments had been ideal, 100 parts/hour, all the unfinished parts taken from the trucks would have been processed and sent to G1 as partially finished parts. The next morning, H1 would have received 100 new parts from the truck and started fresh. Instead, they ended the day with a full pallet of 100 parts. When they get in tomorrow, the runners will drop off another 100 parts right away, so they’ll be in the same hole as before, with 200 parts waiting. If they get a few batches of 50 parts, or even a batch of 0 parts, they’ll have a chance to catch up. And they’ll need to, because eventually they’ll get another batch of 200 parts, which will set them back even further.

Every time this happens, inventory goes up! How did we describe inventory? Inventory is all the money that the system has invested in purchasing things which it intends to sell. Those 100 parts sitting on the floor overnight or between shifts are money tied up in something that can’t be sold yet; hence, inventory. Let’s say that Fred did pull 50 parts out of thin air (or, more likely, found them in a storeroom somewhere) at 1pm. 100 parts instead of 50 would have been sent through, but at the end of the day, the 200 parts that arrived at 2pm would still leave 100 parts sitting on the floor in front of H1.
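Re-running an hourly model of H1 with the 50 extra parts added at 1pm makes the point concrete. This is a hypothetical sketch: the function name and list layout are illustrative, arrivals are listed per working hour from 9am to 4pm with lunch skipped, and the machine is assumed to finish at most 100 queued parts per hour.

```python
# Hypothetical H1 model: queued parts, at most 100 finished per hour.
def end_of_shift(arrivals, capacity=100):
    """Return (parts processed, parts left in front of H1 at 5pm)."""
    waiting = processed = 0
    for count in arrivals:  # one entry per working hour, lunch skipped
        waiting += count
        done = min(waiting, capacity)
        waiting -= done
        processed += done
    return processed, waiting


actual_day = [100, 100, 100, 50, 200, 100, 100]
extra_50_at_1pm = [100, 100, 100, 100, 200, 100, 100]

print(end_of_shift(actual_day))       # (650, 100)
print(end_of_shift(extra_50_at_1pm))  # (700, 100): quota met, same leftover inventory
```

The extra parts let H1 hit its quota, but the 2pm batch still leaves 100 parts of inventory on the floor overnight.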

Here’s the real kicker, though: how do local efficiencies affect throughput? What’s the capacity of the machine at G1? How many H1 parts can it handle an hour? If it’s less than 100, inventory starts accumulating in front of it every hour. What if it breaks down for a bit and accumulates even more parts? What if H2, which receives 30 parts/hr on average but can process 50 parts/hr, has a few hours of higher-than-average output? All the parts from H1 and H2 become inventory, not throughput. The whole time, the team at F (assembly) can’t put together any Fobs to sell. They are only receiving finished parts from G2, so there’s nothing to assemble and many games of Go Fish are played.

One of the worst outcomes is that H1 is deemed inefficient and steps are taken to remedy this. When the original truck is short of parts for H1, another truck is sent with the remainder, just for that hour. H1 gets the 50 parts they need, and operational expense has gone up in addition to inventory. The guys at F are still sitting around whenever H2 and H3 are shorted parts, so the plant manager arranges extra trucks to keep stations H2 and H3 busy at all times. Now there’s more inventory at G1 and G2, and when G1 goes on the fritz again, H1 and H2 both pile up inventory in front of it, very “efficiently”! However, each station can still only go so fast, so throughput has actually gone down: the rate at which the system generates money is falling because the money is all tied up in inventory!

Unless the plant manager has read The Goal. Then they realize that H1’s efficiency numbers aren’t harming throughput or increasing inventory, and no black marks go in personnel files.

There’s a bit of math that goes into the dependency graph, but it shows us how focusing on local efficiency rather than overall system throughput can be shortsighted. Common sense, indeed.
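That bit of math can be sketched with a small simulation of one path through the graph: H1, then G1, then F. This is a simplified, hypothetical model. It ignores H2, H3, and G2, treats F as consuming one part per Fob, and assumes a G1 capacity of 90 parts/hr purely to illustrate a station slower than H1. Each hour, parts move through the stations in order, and each station finishes at most its capacity.

```python
# Hypothetical serial line. H1's 100/hr comes from the story; G1's 90/hr is
# an assumed capacity chosen only to be "less than 100".
STATIONS = [("H1", 100), ("G1", 90), ("F", 100)]


def run_line(hours, parts_released_per_hour):
    """Return (finished Fobs, parts left as inventory in front of stations)."""
    queues = {name: 0 for name, _ in STATIONS}
    finished = 0
    for _ in range(hours):
        incoming = parts_released_per_hour
        for name, capacity in STATIONS:
            queues[name] += incoming
            incoming = min(queues[name], capacity)  # what this station finishes
            queues[name] -= incoming
        finished += incoming  # output of F is what can actually be sold
    return finished, sum(queues.values())


print(run_line(8, 100))  # (720, 80): 10 parts/hr pile up in front of G1
print(run_line(8, 130))  # (720, 320): extra trucks add inventory, not sales
```

In this model, releasing 30 more parts per hour, the equivalent of the plant manager’s extra trucks, leaves the number of finished Fobs unchanged at 720 while the inventory on the floor quadruples, and the extra trucks add operational expense on top.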

Applying the lesson to IT

How does an enlightened IT manager apply what they’ve learned from manufacturing plants? How can we take the concepts of throughput and efficiency and apply them to our jobs? While IT does not always have physical inventory to slow us down, we can definitely relate to the concept of throughput. The difference is that our stations are not steel presses and furnaces, but people (like Jennifer and Joe), departments and organizations (like development and quality assurance), and customers.

Just like each station in a plant, each person in IT has a maximum capacity. Jennifer can only work so fast, taking 20 customer issues a day and turning them into partially finished goods for Joe to process. Joe is able to complete work on 18 issues a day, so when Jennifer is operating at maximum (local) efficiency, Joe sees a pile of tickets he can’t handle, growing by two every day. That’s inventory piling up, but if Jennifer slows down to 18 issues a day, there’s no accumulation, and Joe still gets products out at the same rate. Jennifer might be only 90% efficient, but throughput is maintained without increasing inventory.
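The Jennifer and Joe arithmetic can be sketched the same way. In this hypothetical model, each day Jennifer’s output joins Joe’s pile and Joe completes at most 18 issues from it; the `run` function and the 10-day horizon are illustrative choices, not anything from a real tracking tool.

```python
JOE_PER_DAY = 18  # Joe's maximum, from the example


def run(days, jennifer_per_day):
    """Return (issues Joe completes, tickets piled up in front of Joe)."""
    pile = 0       # partially finished work waiting for Joe: inventory
    completed = 0  # work that moves on toward the customer
    for _ in range(days):
        pile += jennifer_per_day
        done = min(pile, JOE_PER_DAY)
        pile -= done
        completed += done
    return completed, pile


print(run(10, jennifer_per_day=20))  # (180, 20): Jennifer at 100%, pile grows 2/day
print(run(10, jennifer_per_day=18))  # (180, 0): Jennifer at 90%, same output, no pile
```

Both cases complete the same 180 issues in 10 days; only the inventory differs.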

IT work, much like a plant, is rarely that simple. Turning a customer request into a finished product and selling it usually involves more than two people. Someone finds a customer to buy the product, the project manager drives the potential sale to completion, Jennifer and Joe develop the product, QA ensures it’s a good product, and Operations delivers and implements the product. In addition, there’s a senior technical resource whom Jennifer and Joe can engage as needed when they run into issues. This probably sounds familiar to everyone.

We’ve already seen how Jennifer and Joe can maintain throughput. What about sales? If sales are too rapid, inventory starts piling up in front of Jennifer, so she starts working faster, pushing some of that inventory onto Joe. Joe was already going as fast as possible, so QA and Operations still get product at the same rate. Inventory is now accumulating all over the place, and product isn’t being sold any faster. Meanwhile, the senior resource is never engaged, because Jennifer and Joe are constantly under the gun and don’t have time to “waste” on engaging the senior. “If they don’t deliver, our reputation with customers will be shot and no one will want our product anymore!” exclaims Sales. Common sense tells us that QA, Implementation, and the senior resource are efficient. That doesn’t matter, since throughput is down: a ton of effort and money has been spent generating potential sales, but the actual sale at the end isn’t happening any faster. Good money has been thrown after bad. So much for common sense!

Some readers may see a simple solution: hire more Jennifers and Joes! That can be a great solution. Jennifer and Joe have been identified as bottlenecks, and widening that bottleneck with increased staff at that level reduces or eliminates it (we’ll touch more on this in the future; in the meantime, check out Eric Wright’s Quick Primer on the Theory of Constraints). This works well if you meet two requirements. First, demand for the product must be predicted to stay at the increased level; you wouldn’t want to fire the new people next month if demand goes down. Second, you must be able to hire competent workers at a reasonable rate.
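A rough way to see what hiring does is to treat each stage’s daily capacity as its per-person rate times headcount, and the slowest stage as the bottleneck. Only Jennifer’s 20 and Joe’s 18 issues a day come from the example; the QA and Operations rates below are assumptions, and `bottleneck` is a hypothetical helper.

```python
# Per-person daily capacity. Jennifer and Joe are from the example;
# QA and Operations are assumed values for illustration.
PER_PERSON = {"Jennifer": 20, "Joe": 18, "QA": 25, "Operations": 30}


def bottleneck(headcount=None):
    """Return (stage, daily capacity) for the slowest stage in the pipeline."""
    headcount = headcount or {}
    capacity = {stage: rate * headcount.get(stage, 1)
                for stage, rate in PER_PERSON.items()}
    stage = min(capacity, key=capacity.get)
    return stage, capacity[stage]


print(bottleneck())                           # ('Joe', 18)
print(bottleneck({"Jennifer": 2, "Joe": 2}))  # ('QA', 25)
```

Under these assumed numbers, doubling Jennifer and Joe raises the pipeline’s capacity from 18 to 25 issues a day, not to 36, because QA becomes the new constraint.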

If you cannot meet those two requirements for hiring, the solution is the counter-intuitive one: slow things down. By looking at throughput rather than local efficiencies, we have identified our bottleneck (Jennifer and Joe). When too many orders come in, they are overwhelmed, inventory goes up, and throughput goes down. If sales brings in fewer leads, project management sends less work to Jennifer, which means less inventory piling up for Joe. QA and Operations are unaffected, and the senior resource gets engaged more frequently as looming deadlines are lifted. Now the system is more efficient: throughput goes up and inventory goes down. Ignoring local efficiencies improves the bottom line.
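The effect of slowing intake can be sketched with a simple multi-stage queue model. Each day, new leads enter Jennifer’s queue and work flows through Jennifer, Joe, QA, and Operations in order, each finishing at most its capacity. Jennifer’s 20 and Joe’s 18 come from the example; the QA and Operations capacities and the 30-leads-per-day case are assumptions, and the model leaves out the senior resource entirely.

```python
# Stage capacities per day. Jennifer and Joe are from the example;
# QA and Operations are assumed values for illustration.
STAGES = [("Jennifer", 20), ("Joe", 18), ("QA", 25), ("Operations", 30)]


def run(days, leads_per_day):
    """Return (work delivered to customers, work waiting at each stage)."""
    queues = {name: 0 for name, _ in STAGES}
    delivered = 0
    for _ in range(days):
        incoming = leads_per_day
        for name, capacity in STAGES:
            queues[name] += incoming
            incoming = min(queues[name], capacity)  # finished by this stage today
            queues[name] -= incoming
        delivered += incoming
    return delivered, queues


print(run(10, leads_per_day=30))
# (180, {'Jennifer': 100, 'Joe': 20, 'QA': 0, 'Operations': 0})
print(run(10, leads_per_day=18))
# (180, {'Jennifer': 0, 'Joe': 0, 'QA': 0, 'Operations': 0})
```

Both runs deliver 180 pieces of work in 10 days; the only difference is the 120 items of inventory that pile up when sales outruns Joe.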

The concept of throughput can be complex, but hopefully you can see how to apply it to fight “common sense” in IT. We have all been taught to judge ourselves and our coworkers based on local efficiencies. If we instead pay more attention to the capacity of each “station” and how it fits into the pipeline that generates sales, we can focus our efforts on work that is actually productive.

This week at work, take a close look at how your productivity is measured. Does your company use common sense or an enlightened sense? I bet I know the answer, and I bet you can find some local efficiencies that aren’t helping to increase throughput. Armed with your new knowledge, you can start attacking those areas one by one. Be proactive, be a leader!